PlanVector AI Launches First Project-Domain Foundation Model PWM-1F, a Project World Model (PWM) and Temporal Causal Inference (TCI) Analysis Engine for Enterprise Project Platforms.

The first generation of “AI for projects” has mostly meant copilots and chat interfaces wired into existing systems. They make it easier to write and read around the work, but they rarely change how projects are actually controlled. The next wave looks different: domain-specific models that operate over project telemetry, not just documents. PWM-1F is PlanVector’s model for that layer.

The first wave of AI for projects

Over the last couple of years, most product and engineering teams have gone through the same cycle with AI. You wire a powerful general-purpose model into your product, give it access to your documents and tickets, maybe add retrieval over a knowledge base or data warehouse, and wrap it in a chat-style interface. Suddenly you have a copilot that can summarize meetings, rewrite status updates, answer “where is this file?” and “what did we decide last week?” It feels genuinely useful.

In the project world, that pattern looks very similar. You point a large language model at project plans, status reports, risk logs, slide decks, spreadsheets and sometimes BI dashboards. You add some retrieval-augmented generation (RAG) on top of your warehouse or analytics layer. Project managers can ask questions in natural language and get back summaries, rewritten narratives and explanations of fields pulled from reports.

This first wave is good at one thing in particular: it makes the content around projects more accessible. It reduces the friction of reading, writing and searching. For day-to-day communication, that is a big step forward.

But if you push it toward the questions that actually decide whether projects succeed or fail, you start to see the limits. Ask “Where is risk really building, not just where the status flags are red?”, “Why is this project quietly eroding margin?” or “If we keep going like this, what does this look like in four weeks?”, and the answers tend to fall back to restating what is already in the documents. The model is fluent, but it is mostly rearranging the same surface information. It doesn’t really have a grip on how the project is behaving.

From documents to telemetry

That gap isn’t unique to projects. You see the same pattern in other domains that care about control and outcomes. A generic model plus RAG is very good at talking about the artefacts around the system. It is much less capable when you want it to reason over the system’s behavior itself.

In any serious control problem there is usually a second layer of data that matters more than the documents: telemetry. In trading, it is the time series of prices and volumes. In industrial systems, it is sensor readings and control signals. In healthcare, it might be vital signs or lab values over time. These are the signals you watch when you want to know not just what people said about the system you are investigating, but what the system is actually doing.

In projects, that telemetry takes the form of metrics that move together over time. Think of the way planned vs. actual cost evolves phase by phase; how backlog and throughput change as work accelerates or stalls; how margin, change orders and utilization interact; how risk indicators flare up or fade. A single snapshot of those numbers is helpful, but the real story is in the trajectory: how they have changed over the last few weeks or months, and how they have changed in relation to one another.
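A small sketch makes the snapshot-versus-trajectory distinction concrete. The `Snapshot` record, its fields and the weekly values are hypothetical illustration data, not PlanVector’s schema: two projects finish week 4 with the same cost overrun, but only the history shows that one has been stable while the other is accelerating.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One point in a project's metric history (hypothetical fields)."""
    week: int
    planned_cost: float
    actual_cost: float


def cost_variance_history(history: list[Snapshot]) -> list[float]:
    """Actual minus planned cost at each snapshot; positive means overrun."""
    return [s.actual_cost - s.planned_cost for s in history]


# Hypothetical data: both projects end week 4 with the same 40.0 overrun.
steady = [Snapshot(1, 100.0, 140.0), Snapshot(2, 200.0, 240.0),
          Snapshot(3, 300.0, 340.0), Snapshot(4, 400.0, 440.0)]
accelerating = [Snapshot(1, 100.0, 100.0), Snapshot(2, 200.0, 205.0),
                Snapshot(3, 300.0, 315.0), Snapshot(4, 400.0, 440.0)]

print(cost_variance_history(steady))        # [40.0, 40.0, 40.0, 40.0]
print(cost_variance_history(accelerating))  # [0.0, 5.0, 15.0, 40.0]
```

A snapshot-only view sees an overrun of 40.0 in both cases; the trajectory is what separates a stable variance from a growing one.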

You can keep using language models to make your status reports, risk logs and emails easier to consume. That layer is still valuable. But if you want AI to answer control-type questions about projects – Is this stable? Is this quietly drifting? What is likely to happen next if we don’t intervene? – you need a model that takes that telemetry seriously. It has to read the metric histories as its primary signal, and treat the documents as supporting commentary rather than the main source of truth.

That is the space PlanVector is focused on: not another copilot over project documents, but a domain model that looks at how projects behave through their metrics over time.

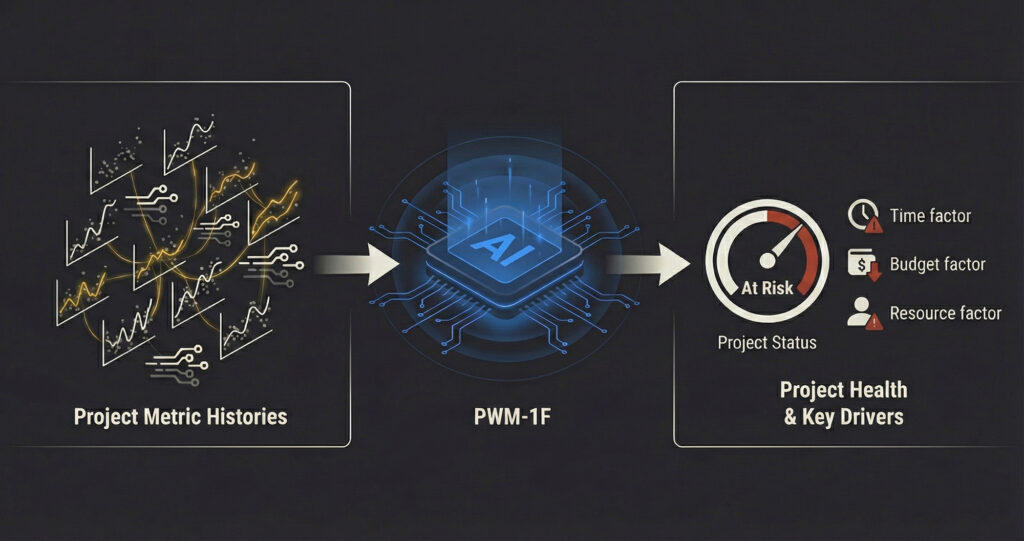

Where PWM-1F fits: a domain foundation model

PWM-1F is that domain model. At the simplest level, it is a model that reads a project’s metric history and turns it into a structured judgment. You give it a sequence of snapshots that show how key project metrics have changed over time, along with a task such as “give me a status”, “explain this margin drift” or “if nothing changes, where are we in four weeks?”. It comes back with an assessment of health, the factors that are helping, the factors that are hurting, and a short-horizon view when you ask for it.

You can think of PWM-1F as the project world model inside your project platform. Instead of treating a project as a pile of files or a flat table, it maintains an internal picture of how the project has been evolving, where it currently sits in terms of health, and what is driving that state. The project’s metric trajectory is the backbone of that picture.

The core capability inside PWM-1F is what we call Temporal Causal Inference, or TCI. In our context, that is not an abstract research term; it is the concrete mapping the model has learned to perform. PWM-1F can read a project’s metric history over time and convert it into a causal judgment: a current health assessment plus the positive and negative drivers that best explain how earlier changes led to the current state. That “metric trajectory in” to “health and factors out” mapping is the main thing the model has been trained to do. It is what makes it possible to feed it, for example, a block of weekly metrics and get back a coherent, grounded read on the project, rather than a generic summary.
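For integrators, it can help to picture the “health and factors out” side of that mapping as a typed result. The sketch below is one possible shape only: `Driver`, `Assessment` and every field name are hypothetical assumptions, since this post does not describe PWM-1F’s actual output schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class Driver:
    """A factor that helps explain the current state (hypothetical shape)."""
    metric: str   # which metric moved
    period: str   # when the relevant shift happened
    effect: str   # short explanation of how it pushed health


@dataclass
class Assessment:
    """Health judgment plus positive and negative drivers (hypothetical shape)."""
    health: str
    positive_drivers: list[Driver] = field(default_factory=list)
    negative_drivers: list[Driver] = field(default_factory=list)
    outlook: Optional[str] = None  # only filled when a short-horizon view is requested


# Illustrative values only, loosely following the example status in this post.
result = Assessment(
    health="fragile",
    positive_drivers=[Driver("schedule_variance", "weeks 5-6",
                             "two strong weeks recovered slippage")],
    negative_drivers=[Driver("open_risks", "weeks 3-6",
                             "risk count rising ahead of the next phase")],
)
payload = json.dumps(asdict(result))  # nested dataclasses become plain JSON objects
print(payload)
```

Keeping positive and negative drivers in separate lists mirrors how the output is described above and lets dashboards or agents render each side independently.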

In AI stack terms, PWM-1F sits between your foundation LLM and your project data. The general-purpose model is still there to handle language, interaction and tools. Retrieval and warehouses are still there to organize and deliver raw data. PWM-1F’s role is different: it is the project-domain model that reads the telemetry – the metric histories – and maintains a consistent understanding of project health and drivers that your analytics, agents and workflows can build on.

What is a Domain Foundation Model?

A domain foundation model (or domain-specific foundation model) is a large-scale AI model, general-purpose within its domain, that has been pre-trained or fine-tuned specifically on a massive corpus of data from one field or industry (the “domain”), such as healthcare, finance, law or project management.

Inside PlanVector, the model that does this work is called PWM-1F. We think of it as a foundation model for the project domain, in other words a Domain Foundation Model. It is a project world model that sits underneath agents and analytics and gives them a consistent understanding of how projects behave.

What you can ask it to do

Once PWM-1F is connected to your platform, you don’t talk to it in equations. You talk to it in normal project language, and it uses the metric trajectory underneath to ground its answers.

A very common pattern is to ask for status, but in a way that goes beyond repeating fields. You send the recent history of metrics and ask, “Give me a project status based on this history.” PWM-1F reads the trajectory, decides how healthy the project actually is and explains that judgement in terms of concrete drivers and time periods. Instead of “Green on scope, Amber on schedule,” you get something closer to “Overall health is fragile: schedule and margin are being held up by a few strong weeks, but underlying workload and risk patterns look unstable for the next phase.”

You can push further into trend and root cause. For example: “Explain the trend and root causes behind the margin change in the last six weeks,” or “Why has schedule reliability deteriorated recently?” In those cases PWM-1F focuses on the parts of the history that matter most for that signal. It picks out which shifts in which metrics acted as negative factors, which countervailing movements helped, and how this combination resembles patterns it encountered during training. The result is an explanation that is anchored in the actual behavior of the project, not just a rephrasing of a variance report.

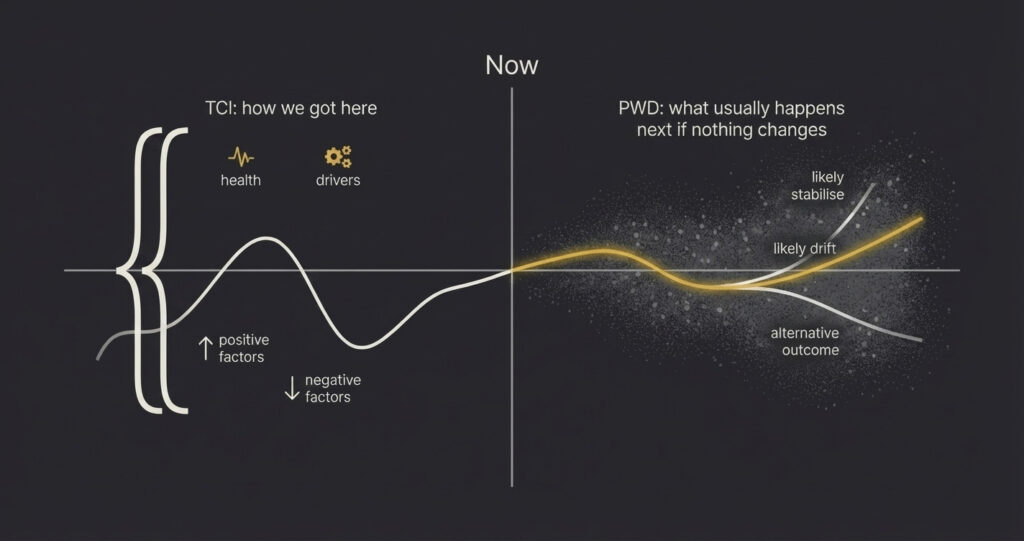

Short-horizon questions are where the “world model” aspect starts to show. If you ask, “If nothing significant changes, what is the likely state of this project in four weeks?”, PWM-1F answers based on how similar trajectories tend to evolve from this point, not by drawing a straight line through one metric. Across the many scenarios it has been trained on, it has developed an internal sense of which patterns usually stabilize with minor intervention, which tend to drift further, and which are early signs of more serious problems. We refer to that internal sense of how project states move over time as Project World Dynamics (PWD). TCI is about understanding how the project ended up where it is; PWD is about understanding where it is likely to go next if we stay on the same path.

You can then use that same internal state as the anchor for “what if” questions. Rather than trying to simulate every detail, PWM-1F can reason from the health and drivers it has inferred. “If we relieve overload on this phase, what usually happens to schedule risk in projects like this?” “If we push one milestone but protect a critical resource, does that reduce the chance of margin erosion?” The answers are not magic; they reflect patterns learned from many trajectories. But they give teams a structured way to think about options, grounded in more than intuition alone.

A bare look at the model

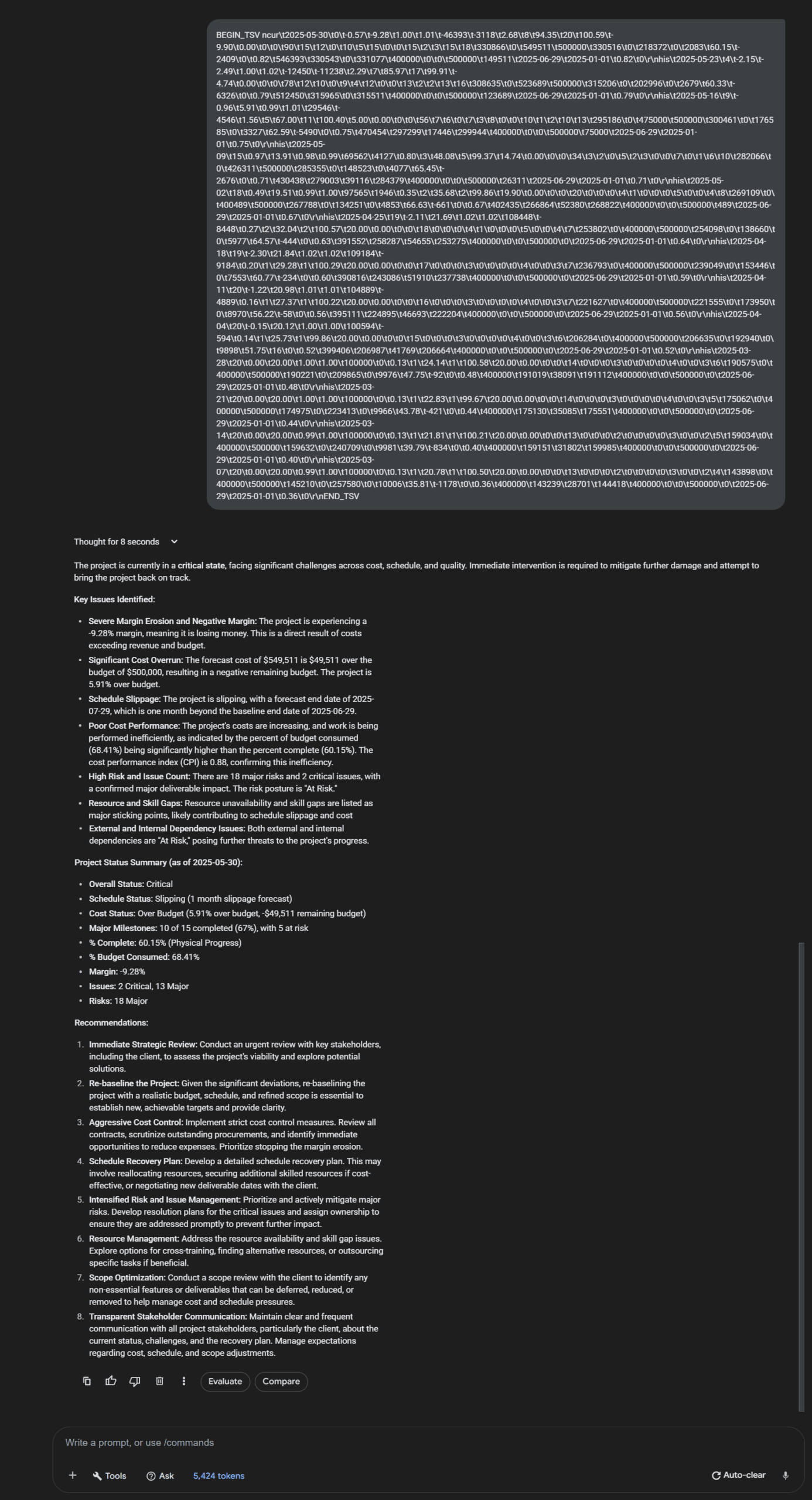

It can be helpful to see what this looks like before you surround it with a full product experience. One of the simplest tests we use internally is a stripped-down run in Vertex AI Studio (see below). There is no system prompt, no retrieval, no orchestration, no tools – just PWM-1F running on top of the base model, with a block of time-series project metrics as input.

The metrics block is a compact, tabular dump of a project’s history. Each row represents a point in time; each column is one of the project metrics the platform tracks. From the model’s perspective, that block is the trajectory: it is the only description of how this project has actually behaved.
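The post does not specify PWM-1F’s exact input layout, so the following is only a sketch, assuming a comma-separated block with one header line naming the metrics, one row per point in time (oldest first) and no per-row labels. The function name and metric names are hypothetical.

```python
import csv
import io


def _compact(value: float) -> str:
    """Render 120.0 as "120" but keep full precision for non-integers."""
    value = float(value)
    return str(int(value)) if value.is_integer() else repr(value)


def to_metrics_block(metric_names: list[str], rows: list[list[float]]) -> str:
    """Serialize a metric history: one column per metric, one row per period."""
    for row in rows:
        if len(row) != len(metric_names):
            raise ValueError("each row must have one value per metric")
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(metric_names)
    for row in rows:
        writer.writerow(_compact(value) for value in row)
    return buffer.getvalue()


block = to_metrics_block(
    ["cost_variance", "backlog", "margin_pct"],
    [[0.0, 120.0, 22.0], [5.0, 131.0, 21.5], [15.0, 140.0, 20.5]],
)
print(block, end="")
# cost_variance,backlog,margin_pct
# 0,120,22
# 5,131,21.5
# 15,140,20.5
```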

There is no extra context, no row headers, no hint about specific risks or issues. The model has to work purely from its post-training on metric history and its internal world model.

The response looks very different from a generic summary. It states an overall view of health, explains which parts of the trajectory led to that judgement and separates positive from negative drivers. It might point out that margin has been quietly eroding despite apparently stable revenue, that schedule reliability has worsened since a particular point, that workload and risk are building in a specific phase, and that the combination of these patterns resembles projects that required corrective action. It will then suggest practical moves that match the situation it has inferred.

That kind of bare-metal test is not how customers will interact with the system, but it is a good way to see the core behavior without any product scaffolding. It makes it clear that the model is not just paraphrasing a status slide; it is actually reading the trajectory and applying Temporal Causal Inference and Project World Dynamics to reach a position.

PWM-1F Project-Domain Foundation Model Example

Why This Matters: This project-domain foundation model enables capabilities well beyond those of today’s AI agents built on generic LLM foundation models. By embedding this engine in a project management system and augmenting it with specific instructions, orchestration and tool access, our customers can create an expert partner that doesn’t just summarize text or data, but truly understands project execution.

Metrics first, then context

In a real platform, PWM-1F does not live in isolation. It is part of a larger architecture that includes your own data model, tools and user experience. One of the key design choices in PlanVector is that metrics are always the starting point.

The reason is simple. Metric histories are the most compact, consistent way to describe how a project has been behaving. They cut across the noise of individual documents and comments, and they are available in every serious project environment, even if the exact names and calculations differ. By starting from that trajectory, PWM-1F can form an initial view of health and drivers that is comparable across projects and portfolios.

From there, additional context comes in as needed. If a question calls for more detail – for example, understanding why risk is building in a specific phase, or why throughput has stalled in one workstream – the model can use platform-provided tools and APIs to fetch focused slices of information. That might be a subset of tasks and dependencies, a set of risk entries linked to the project, the structure of a work breakdown, the load on key roles, or current material delivery dates.

The typical interaction loop looks like this. The platform sends recent metric histories for one or more projects along with a task. PWM-1F runs its core TCI pass, infers health and drivers, and returns an initial assessment. If the task or the uncertainty in that assessment suggests that more context is needed, the model triggers calls back into the platform to retrieve specific supporting data. It then refines its explanation and recommendations based on both the metric trajectory and the extra structures.
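The loop can be sketched in a few lines. This is not PlanVector’s implementation: `ModelReply`, `run_analysis`, the tool name `get_risk_entries` and the stub model are hypothetical stand-ins, assumed here only to show the control flow of a metrics-only first pass followed by optional, bounded tool calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ModelReply:
    assessment: str
    needs_tool: Optional[str] = None              # platform tool the model wants called
    tool_args: dict = field(default_factory=dict)


# (task, metrics_block, extra_context) -> reply; stands in for a call to the model.
Model = Callable[[str, str, list], ModelReply]


def run_analysis(model: Model, tools: dict[str, Callable[..., dict]],
                 task: str, metrics_block: str, max_tool_calls: int = 3) -> str:
    context: list[dict] = []
    reply = model(task, metrics_block, context)   # initial pass on metrics alone
    calls = 0
    while reply.needs_tool is not None and calls < max_tool_calls:
        tool = tools.get(reply.needs_tool)
        if tool is None:                          # tool not exposed by the platform
            break
        context.append({"tool": reply.needs_tool, "result": tool(**reply.tool_args)})
        calls += 1
        reply = model(task, metrics_block, context)  # refine with fetched context
    return reply.assessment


def stub_model(task: str, metrics_block: str, context: list) -> ModelReply:
    """Hypothetical stand-in: asks for risk entries once, then refines."""
    if not context:
        return ModelReply("risk building in phase 2", "get_risk_entries", {"phase": "2"})
    open_risks = context[-1]["result"]["open_risks"]
    return ModelReply(f"risk building in phase 2; {open_risks} open risks linked")


tools = {"get_risk_entries": lambda phase: {"phase": phase, "open_risks": 3}}
print(run_analysis(stub_model, tools, "Why is risk building?", "cost_variance\n0\n5\n"))
# risk building in phase 2; 3 open risks linked
```

Capping the number of tool calls keeps each run bounded, and if the requested tool is unavailable the metrics-only assessment is returned unchanged.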

Keeping metrics as the primary signal and using other data selectively has two practical benefits. It makes the system’s behavior more stable and predictable, because every project is anchored in the same kind of trajectory. And it keeps the integration surface clear for platforms: you map your metrics once, expose a small set of tools for additional context, and let PWM-1F decide when it actually needs to look deeper.
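“Map your metrics once” can be as simple as a declarative table from platform field names to canonical metric names, plus any unit conversion. The field names, canonical names and scale factors below are hypothetical; a real integration would follow whatever metric definitions the platform and PlanVector agree on.

```python
# canonical metric name -> (platform field name, multiplier into canonical units)
METRIC_MAP: dict[str, tuple[str, float]] = {
    "actual_cost": ("act_cost_k", 1000.0),    # platform stores thousands
    "planned_cost": ("plan_cost_k", 1000.0),
    "margin_pct": ("margin_ratio", 100.0),    # platform stores a 0-1 ratio
}


def map_record(record: dict[str, float]) -> dict[str, float]:
    """Translate one platform snapshot into canonical metrics, failing loudly on gaps."""
    missing = [source for source, _ in METRIC_MAP.values() if source not in record]
    if missing:
        raise KeyError(f"platform record is missing fields: {missing}")
    return {name: record[source] * scale for name, (source, scale) in METRIC_MAP.items()}


print(map_record({"act_cost_k": 440.0, "plan_cost_k": 400.0, "margin_ratio": 0.25}))
# {'actual_cost': 440000.0, 'planned_cost': 400000.0, 'margin_pct': 25.0}
```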

How PWM-1F is built, at a high level

This post is about what PWM-1F does, not about how we built it, but it is worth saying a little about what sits under the hood for anyone making platform or architecture decisions.

In AI terms, PWM-1F is a project-domain foundation model. It does not replace a general-purpose foundation model; it sits on top of one. Today that backbone is Google Gemini. The general model is responsible for language, interaction and tool use. PWM-1F is the specialized layer that has been adapted to the project domain and trained to treat metric histories as its primary signal.

Rather than learning from live customer portfolios, PWM-1F is trained on a combination of structured project management knowledge and synthetic project scenarios generated by a proprietary data engine. The goal is straightforward: expose the model to a wide range of realistic project behaviors without copying any specific customer’s data. Real project portfolios are used to validate and refine assumptions and to test how the model behaves, not as direct training material.

Across many scenarios, PWM-1F learns to recognize patterns in multivariate metric trajectories and to map them to consistent judgements of health and drivers. That is where Temporal Causal Inference and Project World Dynamics come from in practice. They are not hand-written rules; they are properties of a model that has been repeatedly trained to answer questions like these: “Given this history, what is the true state of this project, what is pushing it there, and what usually happens next from here?”

For AI teams, it can help to think of PWM-1F as a multi-modal layer on top of the base model. Metric histories behave like a structured time-series modality. Project artefacts such as tasks, risks and work packages are accessed through tools as additional structured inputs when needed. Text remains the medium for tasks, explanations and plans, supplemented by charts and similar outputs produced through tools. PWM-1F is the part that connects the telemetry to a stable internal state the rest of your stack can build on.

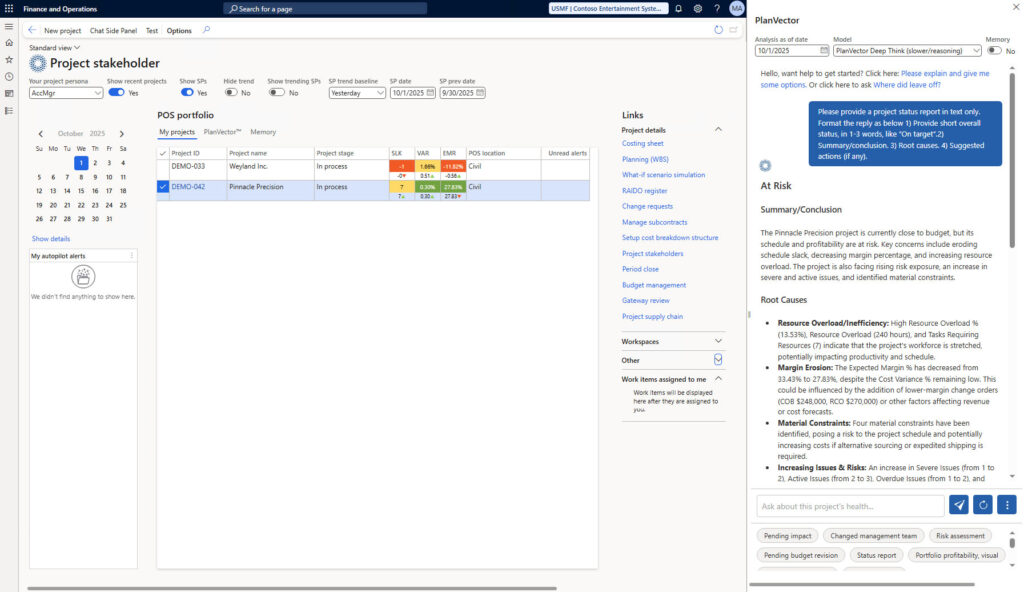

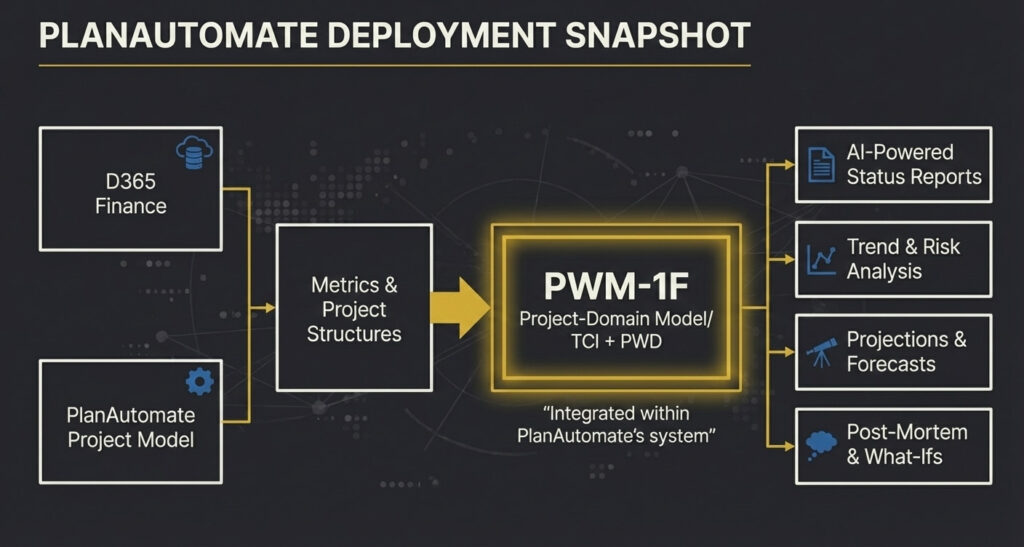

What this looks like in production: PlanAutomate on D365

All of this becomes more tangible when you see PWM-1F in a real product. PlanAutomate, a Microsoft Dynamics 365 solution provider focused on complex project-driven businesses, is the first platform to run PWM-1F in production. Inside their Enterprise Project Management environment on D365 Finance, PWM-1F shows up as an AI analyst that is tightly integrated with their own data model and user experience.

PlanAutomate feeds PWM-1F with regular project metric histories drawn from D365 Finance and from its own project structures. On top of that feed, they expose a set of capabilities that match how project organizations actually work. When a user asks for a status report, PWM-1F analyses the underlying health of the project and generates a narrative that reflects real momentum, not just the current red/amber/green (RAG) ratings. When someone wants to understand how a project is trending, the model reads the direction, speed and acceleration of key metrics and explains whether the project is stabilizing, quietly drifting or heading onto a risky path.
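Direction, speed and acceleration have simple numerical meanings: the sign of the latest period-over-period change, its size, and how that change is itself changing (first and second differences). PWM-1F learns to read these patterns rather than applying fixed formulas, so the sketch below only illustrates the quantities; the function names and the weekly margin values are hypothetical.

```python
def differences(values: list[float]) -> list[float]:
    """Period-over-period change: values[i+1] - values[i]."""
    return [later - earlier for earlier, later in zip(values, values[1:])]


def trend_summary(values: list[float]) -> dict[str, object]:
    """Direction, speed and acceleration of the most recent movement in one metric."""
    if len(values) < 3:
        raise ValueError("need at least three points to estimate acceleration")
    velocity = differences(values)          # first difference: change per period
    acceleration = differences(velocity)    # second difference: change in that change
    latest = velocity[-1]
    direction = "up" if latest > 0 else "down" if latest < 0 else "flat"
    return {"direction": direction, "speed": latest, "acceleration": acceleration[-1]}


# Hypothetical weekly margin (%): falling, and falling faster each week.
print(trend_summary([22.0, 21.5, 20.5, 19.0]))
# {'direction': 'down', 'speed': -1.5, 'acceleration': -0.5}
```

Here margin is falling by 1.5 points per week and the decline is steepening by a further 0.5 points each week, which is the kind of quiet drift a single snapshot would not reveal.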

For forward-looking questions, PlanAutomate leans on PWM-1F’s view of Project World Dynamics. The model analyses current performance and provides near-term projections for budget, timeline and scope, so teams can see where they are likely to land if they do not intervene. When a variance appears in margin or schedule, PWM-1F helps explain it in terms of underlying drivers rather than just numbers. And when risk is the focus, the model looks beyond static risk logs, scanning trajectories for early patterns that have historically preceded trouble.

After projects close, PlanAutomate can use the same capabilities for post-mortems and counterfactuals. Instead of only recording what went wrong, teams can ask what combination of factors led there and explore how different decisions might have changed the outcome. To their customers this appears as an experienced project advisor embedded in the system they already use. Under the surface, it is PWM-1F doing the metric-trajectory reading, causal reasoning and explanation that powers those experiences.

Learn more about PlanAutomate’s rollout of PlanVector AI.

For product and AI teams who want to go deeper

PWM-1F is aimed at teams that build project, ERP, PPM and PSA platforms. Whether you are just getting started with AI or have already explored the first wave of copilots and RAG and know where it falls short, contact us to learn more about adding our project-domain model to your stack.

PWM-1F treats projects as behaviors over time, not just collections of documents. It reads metric histories as telemetry, uses Temporal Causal Inference to infer health and drivers, and applies Project World Dynamics to reason about where trajectories usually lead. It runs on top of a general foundation model, but its behavior has been shaped specifically for the project domain and it does not train on your customers’ projects.

At this stage, the details of the training regime, synthetic scenario design and evaluation are covered in technical briefings rather than public posts. If you want to understand how your existing metrics and structures would map into PWM-1F, what an integration would look like in your architecture, or how our model could fit with the AI investments you already have, contact us for a conversation. Bear in mind that some subjects can only be discussed under NDA.

What this post is meant to convey is the core idea: PWM-1F is not a prompt template on a generic model. It is a project-domain foundation model built to read project telemetry and give your platform a consistent, causal understanding of how its projects are actually behaving.

Next Steps: Contact PlanVector for a demonstration and further investigation.